Stability AI, the startup behind the text-to-image AI system Stable Diffusion, is making a major effort to apply AI to the frontiers of biotech. Called OpenBioML, the effort’s first projects focus on machine learning-based approaches to DNA sequencing, protein folding and computational biochemistry.

The company’s founders describe OpenBioML as an “open research laboratory” that aims to explore the intersection of AI and biology in a setting where students, practitioners and researchers can participate and collaborate, according to Emad Mostaque, CEO of Stability AI.

“OpenBioML is one of the independent research communities that Stability supports,” Mostaque told TechCrunch in an email interview. “Stability looks to develop and democratize AI, and through OpenBioML, we see an opportunity to advance the state of the art in science, health, and medicine.”

Given the controversy surrounding Stable Diffusion (Stability AI’s system that generates art from text descriptions, similar to OpenAI’s DALL-E 2), one might be wary of Stability AI’s first foray into healthcare. The startup has taken a laissez-faire approach to governance, allowing developers to use the system however they wish, including for celebrity deepfakes and pornography.

Stability AI’s ethically questionable decisions to date aside, machine learning in medicine is a minefield. While the technology has been used successfully to diagnose conditions such as skin and eye diseases, studies show that algorithms can develop biases that result in worse care for some patients. An April 2021 study, for example, found that statistical models used to predict suicide risk in mental health patients performed well for white and Asian patients but poorly for Black patients.

OpenBioML is starting with safer territory, wisely. Its first projects are:

- BioLM, which seeks to apply natural language processing (NLP) techniques to the fields of computational biology and chemistry

- DNA-Diffusion, which aims to develop an AI that can generate DNA sequences from text prompts

- LibreFold, which looks to increase access to AI protein structure prediction systems similar to DeepMind’s AlphaFold 2

Each project is led by independent researchers, but Stability AI is providing support in the form of access to its AWS-hosted cluster of more than 5,000 Nvidia A100 GPUs to train the AI systems. According to Niccolò Zanichelli, a computer science undergraduate at the University of Parma and one of the lead researchers at OpenBioML, this will be enough processing power and storage to eventually train up to 10 different AlphaFold 2-like systems in parallel.

“A lot of computational biology research already leads to open source releases. However, most of it happens at the single lab level and is therefore often limited by insufficient computational resources,” Zanichelli told TechCrunch in an email. “We want to change this by encouraging large-scale collaboration and, thanks to the support of Stability AI, back those collaborations with resources that only the largest industrial laboratories have access to.”

Generating DNA sequences

Of OpenBioML’s ongoing projects, DNA-Diffusion, led by pathology professor Luca Pinello’s lab at Massachusetts General Hospital and Harvard Medical School, is perhaps the most ambitious. The goal is to use generative AI systems to learn and apply the rules of “regulatory” sequences of DNA, or segments of nucleic acid molecules that influence the expression of specific genes within an organism. Many diseases and disorders are the result of misregulated genes, but science has not yet found a reliable process to identify these regulatory sequences, much less change them.

DNA-Diffusion proposes using a type of AI system known as a diffusion model to generate cell-type-specific DNA sequences. Diffusion models, which underpin image generators such as Stable Diffusion and OpenAI’s DALL-E 2, create new data by learning how to destroy and then recover many existing samples of data (e.g. DNA sequences). As they are fed more samples, the models get better at recovering the data they previously destroyed, which is what lets them generate new data.
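The “destroy and recover” idea can be made concrete with a toy sketch. The code below is an illustration only, not DNA-Diffusion’s implementation: the one-hot encoding, the linear noise schedule and every function name are assumptions chosen for clarity. It blends a one-hot-encoded DNA sequence with Gaussian noise, then undoes the blend using the true noise. A trained diffusion model would have to predict that noise from the corrupted sample alone, which is the part this sketch skips.

```python
import math
import random

BASES = "ACGT"

def one_hot(seq):
    """Encode a DNA string as a list of 4-element one-hot vectors."""
    return [[1.0 if base == b else 0.0 for b in BASES] for base in seq]

def decode(x):
    """Map each position back to the base with the largest value."""
    return "".join(BASES[max(range(4), key=lambda i: row[i])] for row in x)

def alpha_bar(t, num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of signal variance left after t steps of a linear schedule
    (schedule values are illustrative assumptions)."""
    prod = 1.0
    for s in range(t):
        beta = beta_start + (beta_end - beta_start) * s / (num_steps - 1)
        prod *= 1.0 - beta
    return prod

def add_noise(x0, t, num_steps, rng):
    """Forward ("destroy") step: blend clean data with Gaussian noise."""
    a = alpha_bar(t, num_steps)
    noise = [[rng.gauss(0.0, 1.0) for _ in row] for row in x0]
    xt = [[math.sqrt(a) * v + math.sqrt(1.0 - a) * n for v, n in zip(r, nr)]
          for r, nr in zip(x0, noise)]
    return xt, noise

def remove_noise(xt, noise, t, num_steps):
    """Reverse ("recover") step given the noise. A trained model would
    predict `noise` from `xt`; here the true noise is passed in."""
    a = alpha_bar(t, num_steps)
    return [[(v - math.sqrt(1.0 - a) * n) / math.sqrt(a) for v, n in zip(r, nr)]
            for r, nr in zip(xt, noise)]

rng = random.Random(0)
seq = "ACGTTGCA"
xt, noise = add_noise(one_hot(seq), t=500, num_steps=1000, rng=rng)
recovered = decode(remove_noise(xt, noise, t=500, num_steps=1000))
```

Because the reverse step here is handed the exact noise, `recovered` matches `seq`. In a real system the quality of the recovery depends entirely on how well the learned noise predictor generalizes.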

Image Credits: OpenBioML

“Diffusion has seen widespread success in multimodal generative models, and it is now starting to be applied to computational biology, for example for the generation of novel protein structures,” Zanichelli said. “With DNA-Diffusion, we’re now exploring its application to genomic sequences.”

If all goes according to plan, the DNA-Diffusion project will produce a diffusion model that can generate DNA sequences from written instructions such as “a sequence that will activate a gene to its maximum expression level in cell type X” and “a sequence that activates a gene in the liver and heart, but not in the brain.” Such a model could also help interpret the components of regulatory sequences, Zanichelli says, improving the scientific community’s understanding of the role of regulatory sequences in various diseases.

It’s important to note that this is mostly theoretical. While preliminary research on applying diffusion to protein folding looks promising, Zanichelli admits it’s very early days — hence the push to involve the broader AI community.

Predicting protein structures

OpenBioML’s LibreFold, while smaller in scope, is more likely to bear fruit quickly. The project seeks to arrive at a better understanding of machine learning systems that predict protein structures, as well as ways to improve them.

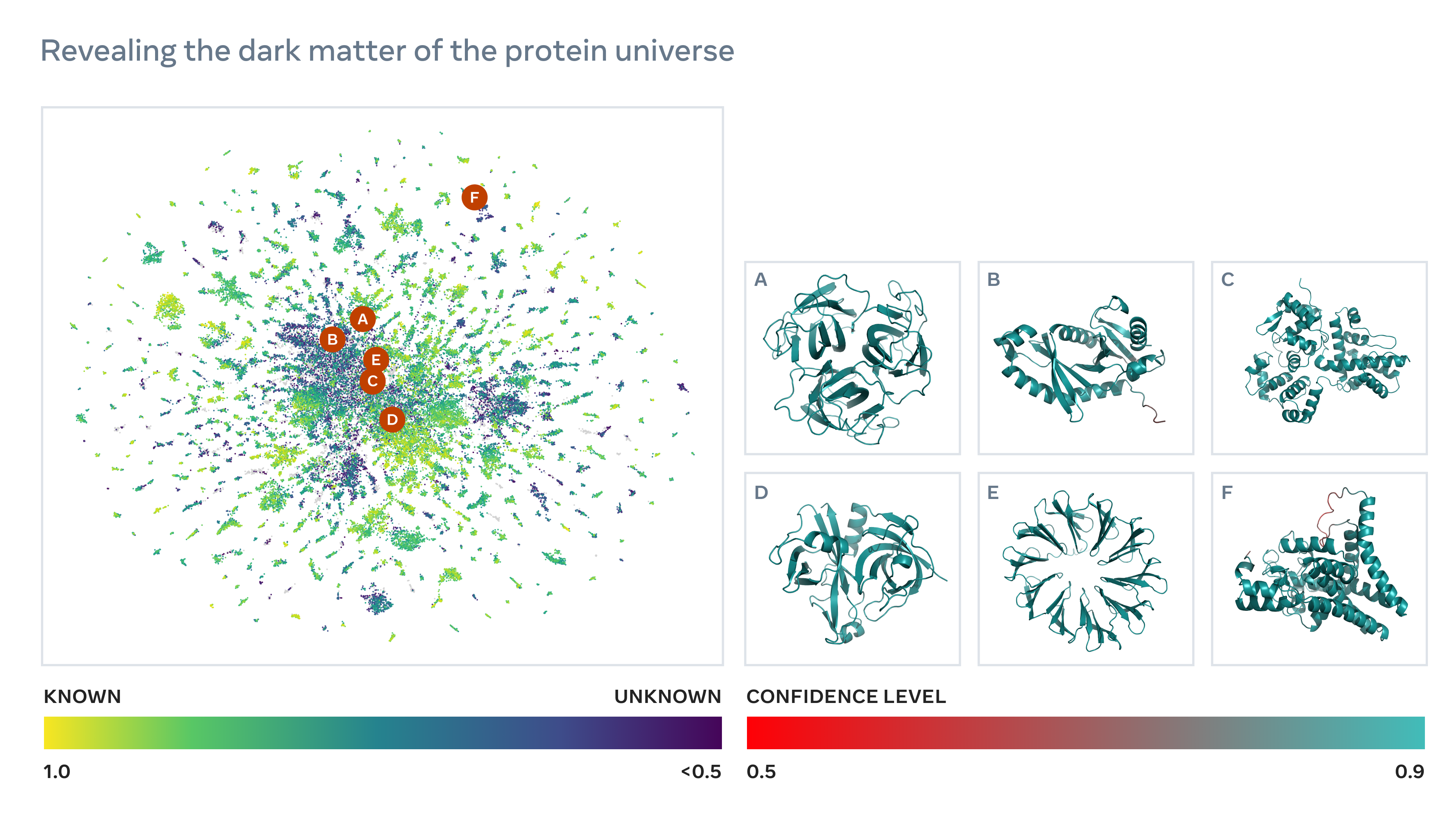

As my colleague Devin Coldewey covered in his piece about DeepMind’s work on AlphaFold 2, AI systems that can accurately predict protein shape are relatively new on the scene but transformative in their potential. Proteins are made up of sequences of amino acids that fold into shapes to perform different functions in living organisms. Determining what shape an amino acid sequence will form was once a tedious, error-prone task. AI systems like AlphaFold 2 have changed that. Thanks to them, more than 98% of the protein structures in the human body are known to science today, as well as hundreds of thousands of other structures in organisms such as E. coli and yeast.

Few teams have the engineering expertise and resources needed to develop this type of AI, though. DeepMind trained AlphaFold 2 on tensor processing units (TPUs), Google’s expensive AI accelerator hardware. And amino acid sequence training datasets are often proprietary or released under non-commercial licenses.

Proteins fold into their three-dimensional structure. Image Credits: Christoph Burgstedt/Science Photo Library/Getty Images

“This is unfortunate, because if you look at what the community was able to build on the AlphaFold 2 checkpoint released by DeepMind, it’s simply incredible,” Zanichelli said, referring to the trained AlphaFold 2 model released by DeepMind last year. “For example, a few days after its release, Seoul National University professor Minkyung Baek reported on Twitter a trick that allowed the model to predict quaternary structures, something few, if any, expected the model to be capable of. There are many more examples of this, so who knows what the wider scientific community could build with the ability to train completely new AlphaFold-like protein structure prediction methods?”

Building on the work of RoseTTAFold and OpenFold, two ongoing community efforts to replicate AlphaFold 2, LibreFold will facilitate “large-scale” experiments with various protein folding prediction systems. Led by researchers at University College London, Harvard and Stockholm, LibreFold’s focus will be on gaining a better understanding of what the systems can do and why, Zanichelli says.

“LibreFold is at its heart a project for the community, by the community. The same holds for the release of both model checkpoints and datasets, as it could take just one or two months for us to start releasing the first deliverables, or it could take significantly longer,” Zanichelli said. “That said, my intuition is that the former is more likely.”

Applying NLP to Biochemistry

On a longer time horizon is OpenBioML’s BioLM project, which has the vaguer mission of “applying language modeling techniques derived from NLP to biochemical sequences.” In collaboration with EleutherAI, a research group that has released several open-source text-generating models, BioLM hopes to train and publish new “biochemical language models” for a variety of tasks, including generating protein sequences.

Zanichelli points to Salesforce’s ProGen as an example of the kind of work BioLM might take on. ProGen treats amino acid sequences like words in a sentence. Trained on a database of more than 280 million protein sequences and associated metadata, the model predicts the next set of amino acids from the preceding ones, much like a language model predicts the end of a sentence from its beginning.
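To illustrate what “predicting the next amino acid from the preceding ones” means, here is a deliberately tiny sketch. It is not ProGen, which is a large neural language model. This stand-in is a bigram counter that looks only at the single previous residue, and the three training sequences are made-up strings of amino acid letters, not real proteins. The function names are likewise assumptions for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(sequences):
    """Count how often each amino acid follows each other amino acid."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, prefix):
    """Return the most frequent successor of the prefix's last residue,
    or None if the prefix is empty or that residue was never followed."""
    if not prefix:
        return None
    followers = counts.get(prefix[-1])
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, prefix, max_new):
    """Greedily extend a prefix one residue at a time."""
    out = prefix
    for _ in range(max_new):
        nxt = predict_next(counts, out)
        if nxt is None:
            break
        out += nxt
    return out

# Made-up toy sequences (one letter per amino acid), for illustration only.
toy_sequences = ["MKTAYIAK", "MKTLLVAG", "MKVAYIAR"]
model = train_bigram(toy_sequences)
extended = generate(model, "M", max_new=2)
```

A real protein language model conditions on the whole preceding sequence (and, in ProGen’s case, on metadata) rather than one residue, but the training signal is the same kind of next-token prediction.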

Nvidia earlier this year released a language model called MegaMolBART, which was trained on a dataset of millions of molecules to predict drug targets and chemical reactions. Meta also recently trained an NLP-based system called ESM-2 on protein sequences, an approach the company says allowed it to predict the structures of more than 600 million proteins in two weeks.

Protein structures predicted by Meta’s system. Image Credits: Meta

Looking ahead

While OpenBioML’s interests are broad (and expanding), Mostaque says they are united by a desire to “maximize the positive potential of machine learning and AI in biology,” following in the tradition of open research in science and medicine.

“We’re looking to enable researchers to have more control over their experimental pipeline for active learning or model validation purposes,” Mostaque continued.

But, as might be expected from a VC-backed startup that recently raised more than $100 million, Stability AI doesn’t see OpenBioML as a purely philanthropic effort. Mostaque said the company is open to exploring commercializing technology from OpenBioML “when it’s advanced enough and safe enough, and when the time is right.”